Mentorship becomes valuable when one thing happens:

Feedback changes the work.

If feedback doesn’t improve the deliverable, mentorship becomes just another meeting. If feedback consistently improves output week after week, mentorship becomes the quality engine behind a live project.

Qollabb’s handbook describes mentorship as a structured model that includes expert mentors, structured feedback mechanisms, real-time support, and alignment between academic objectives and industry standards. In other words: mentorship is designed to be operational, not inspirational.

This post is a practical playbook you can apply to any live project to make mentorship actually work.

What Mentorship Is Supposed to Do in Live Projects

Live projects aren’t classroom assignments. They’re built around real outcomes, real constraints, and real expectations.

That’s why mentorship matters:

- It keeps the project aligned to deliverables.

- It helps students improve quality through review and iteration.

- It helps universities run projects consistently.

- It helps companies get clearer signals from work.

On Qollabb, mentorship is described as being supported by industry professionals with regular check-ins and feedback on deliverables, so students can refine and improve their work over time.

Why Feedback Usually Fails (Even When Everyone Has Good Intentions)

Feedback tends to fail for predictable reasons:

- It’s too vague. “Make it better” doesn’t create action.

- It comes too late. Feedback in week 4 can’t fix weak foundations in week 1.

- It’s not tied to a deliverable. You can’t improve what isn’t clearly defined.

- It isn’t tracked. Nobody knows what changed and why.

The fix isn’t more meetings. The fix is a clean feedback loop.

The Feedback Loop Playbook (Run This Weekly)

Step 1: Send a “Pre-Review Packet” Before the Check-In

Mentor calls go wrong when the mentor has to “discover” what’s happening live.

Send this 24 hours before the review:

Pre-Review Packet Template

- What changed since last review: 3 bullets

- Current status: on track / at risk / blocked + why

- Deliverable link: current version

- What I want feedback on: 2-3 specific questions

- Next milestone: what I’ll submit + when

This makes feedback sharper, faster, and more useful.
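If the packet is collected through a form or script, the template can be checked for completeness before it is sent. The following is a minimal sketch, not a Qollabb feature: the `PreReviewPacket` class, its field names, and `packet_problems` are hypothetical names chosen for illustration. It requires Python 3.9 or later.

```python
from dataclasses import dataclass

# The three statuses named in the template.
VALID_STATUSES = {"on track", "at risk", "blocked"}


@dataclass
class PreReviewPacket:
    # Hypothetical field names mirroring the template bullets.
    changes: list[str]       # what changed since last review
    status: str              # "on track", "at risk", or "blocked"
    status_reason: str       # the "why" behind the status
    deliverable_link: str    # link to the current version
    questions: list[str]     # what I want feedback on
    next_milestone: str      # what I'll submit + when


def packet_problems(packet: PreReviewPacket) -> list[str]:
    """Return the gaps to fix before sending; an empty list means ready."""
    problems = []
    if len(packet.changes) != 3:
        problems.append("list exactly 3 changes since the last review")
    if packet.status not in VALID_STATUSES:
        problems.append("status must be on track, at risk, or blocked")
    if not packet.status_reason.strip():
        problems.append("explain why the status is what it is")
    if not packet.deliverable_link.strip():
        problems.append("add a link to the current deliverable")
    if not 2 <= len(packet.questions) <= 3:
        problems.append("ask 2-3 specific questions")
    if not packet.next_milestone.strip():
        problems.append("state the next milestone and its date")
    return problems
```

A packet that returns an empty list is ready to send 24 hours before the review; anything else tells the student what is still missing.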

Step 2: Bucket Feedback into 3 Types During the Review

This prevents overload and confusion.

- Must-fix (blocking issues)

- Improve quality (important improvements)

- Optional (nice-to-have)

Now everyone leaves the review with priorities.

Step 3: Convert Feedback into Actions + Proof

Feedback should translate into visible change.

Use this table:

| Feedback received | What I will change | Where it will show up | Proof it’s fixed |
| --- | --- | --- | --- |
| “Scope is unclear” | Rewrite problem statement | Intro section | Before/after paragraph |
| “Needs validation” | Add analysis/testing | Results section | Output screenshot/data |
| “Explanation is weak” | Add rationale | Approach section | 3-bullet justification |

This is the difference between “took feedback” and “improved deliverables.”
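For teams that track this in a script or notebook rather than a document, the table and the three buckets from Step 2 can be combined into one small record. This is a rough sketch under stated assumptions: `FeedbackItem`, `BUCKET_ORDER`, and `open_items` are hypothetical names, and the bucket assigned to each example row is made up for illustration. It requires Python 3.9 or later.

```python
from dataclasses import dataclass

# Priority order of the three feedback buckets from Step 2.
BUCKET_ORDER = ["must-fix", "improve quality", "optional"]


@dataclass
class FeedbackItem:
    feedback: str     # feedback received
    bucket: str       # one of BUCKET_ORDER
    change: str       # what I will change
    location: str     # where it will show up
    proof: str = ""   # proof it's fixed; empty until the change is done


def open_items(items: list[FeedbackItem]) -> list[FeedbackItem]:
    """Return items that still lack proof, with must-fix items first."""
    pending = [item for item in items if not item.proof.strip()]
    return sorted(pending, key=lambda item: BUCKET_ORDER.index(item.bucket))


# Example rows from the table; the bucket choices are assumptions.
items = [
    FeedbackItem("Explanation is weak", "optional",
                 "Add rationale", "Approach section"),
    FeedbackItem("Scope is unclear", "must-fix",
                 "Rewrite problem statement", "Intro section"),
    FeedbackItem("Needs validation", "improve quality",
                 "Add analysis/testing", "Results section",
                 proof="Output screenshot/data"),
]

for item in open_items(items):
    print(f"{item.bucket}: {item.feedback} -> {item.change}")
```

An item drops off the open list only once its proof field is filled in, which keeps the focus on visible change rather than on feedback that was merely acknowledged.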

Step 4: Use Real-Time Support for Blockers (Fast)

Projects stall when blockers sit unresolved.

A practical rule:

If you’re blocked for more than 24 hours, escalate.

Your message should include:

- what you tried

- what’s failing

- screenshots/links

- what you need next

Qollabb’s mentorship model also highlights real-time support and troubleshooting as part of keeping projects on track.
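The 24-hour rule and the four-part message are simple enough to automate as a reminder. The sketch below is one possible way to do it; `should_escalate` and `escalation_message` are hypothetical helper names, not part of any Qollabb tooling.

```python
from datetime import datetime, timedelta

# The practical rule above: escalate after more than 24 hours blocked.
ESCALATION_THRESHOLD = timedelta(hours=24)


def should_escalate(blocked_since: datetime, now: datetime) -> bool:
    """True once a blocker has been open for more than 24 hours."""
    return now - blocked_since > ESCALATION_THRESHOLD


def escalation_message(tried: str, failing: str, evidence: str, need: str) -> str:
    """Format the four parts an escalation message should include."""
    return "\n".join([
        f"What I tried: {tried}",
        f"What's failing: {failing}",
        f"Screenshots/links: {evidence}",
        f"What I need next: {need}",
    ])
```

Passing `now` in explicitly, rather than reading the clock inside the function, keeps the check easy to test and lets a team apply its own cutoff time.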

Step 5: Close the Loop with a Post-Review Summary

After the review, send a summary to lock in what was agreed:

Post-Review Summary Template

- Top 3 action items

- Next deliverable + due date

- What I want reviewed next week

- Any risks (if applicable)

This creates continuity and prevents miscommunication.

What Students Should Do to “Perform Well” Under Mentorship

Mentorship improves quality fastest when students do three things consistently:

1) Bring work early

Feedback works best on drafts, not just final versions.

2) Ask specific questions

Replace “any feedback?” with:

- “Is my approach aligned to the deliverable?”

- “What’s missing for quality?”

- “Is this clear enough to be evaluated quickly?”

3) Show improvement week after week

Mentorship is a performance environment. If your deliverables improve consistently, your project becomes easier to evaluate and easier to trust.

What Universities Can Do to Make Mentorship Consistent

Universities don’t need to micromanage project work. The smart move is to standardize the system.

A simple institutional structure:

- one fixed pre-review packet format

- one fixed post-review summary format

- one weekly cadence for check-ins

- one feedback-to-proof table per week

This keeps mentorship consistent across batches and departments, and it supports alignment between academic expectations and industry standards, something the Qollabb model highlights.

What Companies Can Do to Give Feedback That Improves Outcomes

Companies get better outputs when feedback is:

- specific

- tied to deliverables

- tied to the next milestone

Use this format:

Employer Feedback Template

- What’s strong (2 bullets)

- What must change before final (2 bullets)

- What would make it excellent (1 bullet)

- Risk/concern (1 bullet)

- Next expectation: milestone + due date + output format

This helps students improve faster and makes performance easier to evaluate later.

Where Professor Shodhak Fits in the Feedback Loop

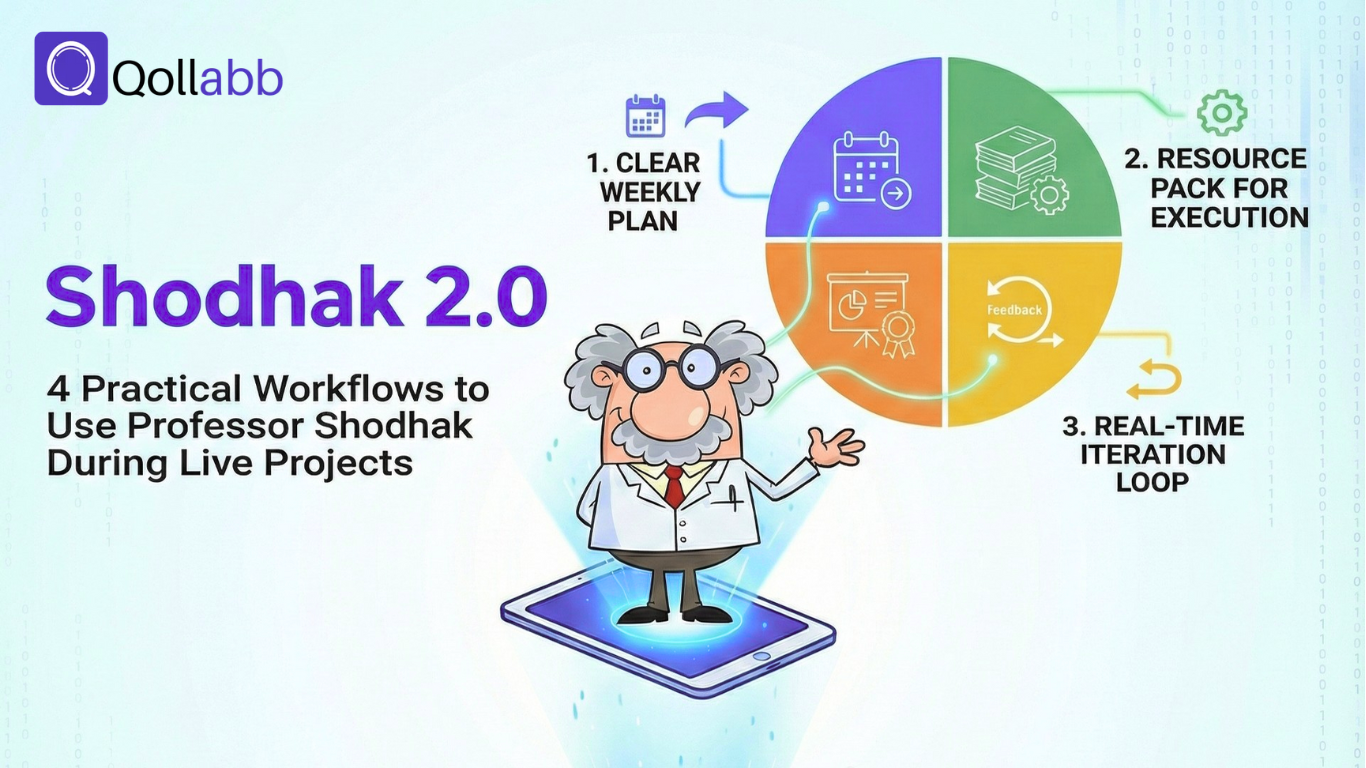

Qollabb’s handbook describes Professor Shodhak as an AI-supported project guide offering personalized guidance, resource integration, real-time feedback, and 24×7 availability.

Used properly, it can support the feedback loop by helping students:

- refine drafts between mentor reviews

- organize project resources

- check clarity and alignment before submitting deliverables

The real win is not “using AI at the end.” It’s using guidance consistently during execution.

Conclusion

Mentorship works when it changes output.

If you run a clean weekly loop (pre-review packet → structured review → action + proof → fast blocker resolution → post-review summary), you’ll get what mentorship is meant to deliver:

better deliverables, week after week.